How do we quantify behavior

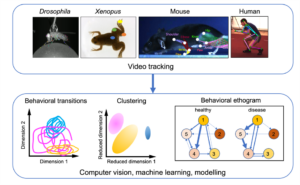

Large datasets and deep convolutional neural networks have driven rapid advances in computer vision, opening new avenues for analyzing behavior and phenotyping pathologies. Multi-perspective, high-resolution video of human or animal behavior can now be processed with unprecedented fidelity and throughput. This yields rich datasets from which dense, quantitative classifications of behavior across different timescales can be derived. With such approaches, the precise geometric configuration of multiple body parts, whether arbitrarily chosen or anatomically constrained, can readily be extracted. Postural epochs or disease phenotypes can then be classified with performance that can surpass human annotators while reducing the biases inherent in manual scoring.

Computer vision and machine learning tools for deep behavioral analysis in animal models, healthy humans, and patients.

Virtual reality view, camera recording, and pose reconstruction of a human participant engaged in foraging under virtual threat. Courtesy of Dr. Ulises Serratos.

Video of a fruit fly walking on an air-supported ball, in which a walking-initiating brain neuron is under optogenetic control. Joint angles are tracked with DeepLabCut, a deep-learning-based pose estimation tool. Combining this view with five additional camera angles enables accurate and robust reconstruction of the kinematics of all leg joint angles at high temporal resolution. Courtesy of Moritz Haustein, Büschges Lab, UoC.

3D tracking of mouse hindlimb kinematics during walking on a runway. The stick diagram connects the hip, knee, ankle, and paw.
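To illustrate what a stick diagram encodes, the minimal sketch below computes the angle at a joint (for example, the knee) from the 3D coordinates of that joint and its two neighbors (hip and ankle). It assumes the keypoints have already been tracked and triangulated into arrays of shape `(n_frames, 3)`. The function name `joint_angle` and this array layout are hypothetical choices for illustration. They are not the output format of DeepLabCut or of any particular lab's pipeline.

```python
import numpy as np


def joint_angle(proximal, joint, distal):
    """Angle in degrees at `joint` between the segments joint->proximal and joint->distal.

    Hypothetical helper: each argument is an array of shape (n_frames, 3)
    holding x, y, z keypoint coordinates for every video frame.
    """
    joint = np.asarray(joint, dtype=float)
    u = np.asarray(proximal, dtype=float) - joint  # e.g. knee -> hip segment
    v = np.asarray(distal, dtype=float) - joint    # e.g. knee -> ankle segment
    # Cosine of the angle between the two segment vectors, per frame
    cos_theta = np.sum(u * v, axis=-1) / (
        np.linalg.norm(u, axis=-1) * np.linalg.norm(v, axis=-1)
    )
    # Clip to [-1, 1] to guard against floating-point drift before arccos
    return np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0)))


# Hypothetical two-frame example (arbitrary units): hip, knee, ankle positions
hip = np.array([[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]])
knee = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]])
ankle = np.array([[1.0, 0.0, 0.0], [0.0, -1.0, 0.0]])
knee_angles = joint_angle(hip, knee, ankle)
print(knee_angles)
```

Here the knee is flexed to a right angle in the first frame and fully extended in the second. Applied frame by frame to tracked video, the same calculation yields a joint-angle time series that can feed downstream behavioral classification.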

We record neuronal activity at single-cell resolution from multiple species during natural behavior

Recent years have brought tremendous breakthroughs in technologies for recording from large populations of neurons in humans and animal models. High-density silicon probes (e.g., Neuropixels 2.0) now allow simultaneous recordings from hundreds of neurons with high temporal fidelity. Advances in the miniaturization of optical components and the rapid evolution of genetically encoded optical activity sensors now also make it possible to use small head-mounted 2- and 3-photon microscopes to record from even larger numbers of identified neurons and subcellular structures in freely moving animals. In invertebrates, recording from nearly all neurons in the brain is now possible with methods such as light-field imaging.

iBehave PIs use customized benchtop 2-photon approaches (Beck, Rose, Gründemann, Grunwald Kadow, Kampa, Fuhrmann, Briggman, Kerr), as well as high-yield imaging techniques to record neuronal activity in the small brains of head-restrained insects (Grunwald Kadow, Schnell, Seelig, Tavosanis). They have also established 3-photon technology for single-neuron imaging deep in the brain (Fuhrmann, Kerr).

iBehave PIs are at the forefront of developing and using miniature head-mounted microscopes for rodent brain recording during natural behavior (Gründemann, Krabbe, Kerr, Rose). Miniaturized 2- and 3-photon microscopy spearheaded by two local PIs (Kerr, Rose) further strengthens this internationally unique focus of the network.

iBehave PIs are also at the forefront of human functional neuroimaging techniques such as mobile magnetoencephalography (MEG; Jonas, Bach). They will establish a new MEG facility based on optically pumped magnetometers (OPMs). These novel quantum optical sensors can measure minute changes in magnetic fields, down to a single femtotesla, while allowing the subject to move freely. A unique feature of the network is the ability to record human neuronal activity at single-cell resolution in epilepsy patients undergoing presurgical evaluation.

Imaging neuronal activity 3 mm deep in the brain of a behaving mouse. Courtesy of Masashi Hasegawa and Jan Gründemann.

Florian Mormann.